If you’ve tried prompt-only AI video tools, you know the pain: sometimes the results are great, but motion consistency can feel like a lottery.

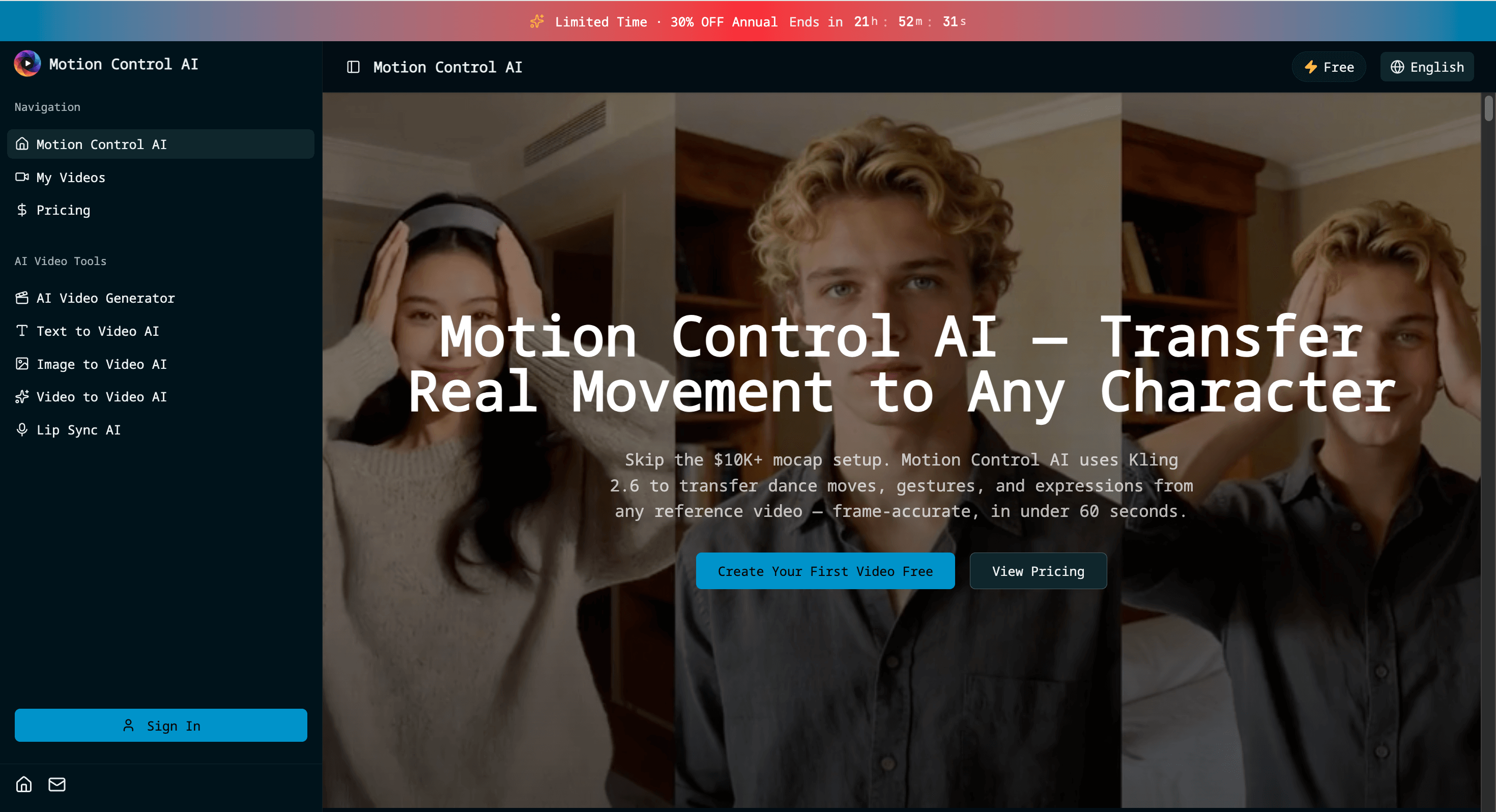

I built Motion Control AI to be control-first: you guide generation with reference motion (a source clip) so character movement is more predictable and repeatable across takes.

What it’s good for

• Creating character-driven short clips for TikTok/Reels/Shorts

• Making multiple ad creative variations without the motion drifting every time

• Quick animation prototyping (story beats, movement tests, concepts)

• Iterating fast: upload → generate → tweak → export

Why reference motion matters

Instead of trying to “describe motion” in text, you can show it, then generate variations that keep the movement direction you want.

🔗 Try it here: Motion Control AI

If you test it, I’d love feedback:

What’s your biggest frustration with AI video today—motion control, character consistency, or editing workflow?
